Did you know that companies that prioritize well cut costs by 15%? By dropping unnecessary items and focusing on the deliverables that matter most, businesses perform better.

It sounds obvious, right? But how do you make those prioritization decisions in the first place? If you’re a business launching a new product or service, what do you prioritize? Which features or product bundles make the cut?

You don’t need to wonder. You can collect a little data and find out. With MaxDiff research, a method that forces respondents to choose between options, businesses get an objective read on what customers really want…and therefore how best to spend company resources.

What Is MaxDiff Research?

MaxDiff (short for maximum difference scaling, also known as best-worst scaling) is a research method that quantifies customer preferences and therefore leads to objective prioritization. At its core, it asks survey respondents to pick the ‘best’ and ‘worst’ option from a set of choices. With enough data from enough respondents, you can see what the market really wants. And you can also see what it doesn’t want.
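Why are best and worst picks so informative? When a respondent chooses the best and worst item from a set of four, you learn five of the six possible pairwise comparisons; only the order of the two middle items stays unknown. The short Python sketch below makes that concrete. The restaurant features and the `implied_pairs` helper are hypothetical, made up for illustration.

```python
from itertools import combinations


def implied_pairs(shown, best, worst):
    """Return the (winner, loser) pairs implied by one best/worst choice (hypothetical helper)."""
    pairs = set()
    for item in shown:
        if item != best:
            pairs.add((best, item))   # the best item beats every other item on the screen
        if item != worst:
            pairs.add((item, worst))  # every other item beats the worst item
    return pairs


# Hypothetical screen of four restaurant features
screen = ["Price", "Location", "Menu variety", "Service"]
pairs = implied_pairs(screen, best="Price", worst="Service")
total = len(list(combinations(screen, 2)))
print(f"{len(pairs)} of {total} pairwise comparisons known")  # prints "5 of 6 pairwise comparisons known"
```

A full ranking of the same four items would give all six comparisons, but at the cost of the extra mental effort the ranking discussion below describes.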

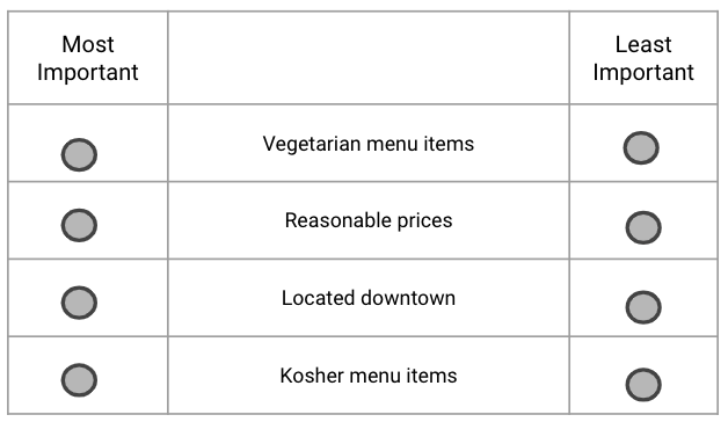

Let’s look at how we do this via survey research. The image above shows exactly what a survey respondent sees.

First, there’s a prompt. Something like, “Select the most and least important feature when selecting a restaurant.” Respondents then choose from the list. When the respondent hits “Next” on the survey, the screen refreshes with a new set of features. They once again choose the most and least important restaurant feature.

Each respondent repeats this process about 5-8 times. In a study with 200 or more participants, that adds up to a lot of preference data about what customers really want.
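Which items land on which screen is decided by the survey’s experimental design. As a rough illustration, the Python sketch below builds one respondent’s screens so that every feature is shown about equally often. It is a simple greedy rule, not the method any particular survey platform uses: real MaxDiff design tools typically also balance how often pairs of items appear together and where items sit on the screen. The function name, the 12 features, and the choice of 8 screens with 4 items each are all assumptions for the example.

```python
import random
from collections import Counter


def make_design(items, n_screens, per_screen, seed=None):
    """Build one respondent's MaxDiff screens with balanced item exposure (hypothetical helper).

    Greedy rule: each screen takes the items shown least often so far,
    breaking ties at random. Item counts never differ by more than one.
    """
    if per_screen > len(items):
        raise ValueError("per_screen cannot exceed the number of items")
    rng = random.Random(seed)
    counts = Counter({item: 0 for item in items})
    screens = []
    for _ in range(n_screens):
        order = list(items)
        rng.shuffle(order)                          # random tie-breaking...
        order.sort(key=lambda item: counts[item])  # ...preserved by Python's stable sort
        screen = order[:per_screen]
        counts.update(screen)
        screens.append(screen)
    return screens, counts


# Hypothetical list of 12 restaurant features
features = ["Price", "Location", "Menu variety", "Service", "Parking", "Ambience",
            "Wait time", "Portion size", "Dietary options", "Cleanliness",
            "Online reviews", "Reservations"]

screens, counts = make_design(features, n_screens=8, per_screen=4, seed=7)
for number, screen in enumerate(screens, start=1):
    print(f"Screen {number}: {', '.join(screen)}")
print(dict(counts))
```

With 8 screens of 4 items, each respondent makes 32 item exposures across 12 features, so every feature is shown two or three times. Giving each respondent a differently seeded design spreads the remaining imbalance across the sample.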

You might be wondering, “Can’t I just have people rank their preferences?” Sure, you can. However, forcing people to rank a bunch of items against each other has several downsides.

- Mental Burden: Ranking a lot of things at the same time is tough. Respondents get overwhelmed, sometimes leading them to thoughtlessly rank items just to be done. MaxDiff limits how many items are ranked at any given time, making it much more manageable.

- Number of Items: Ask people to rank too many things at once and they can’t keep track of them all. That’s why ranking questions generally stop at 8-10 items. MaxDiff studies, in contrast, show only a few options at a time. As a result, you can test upwards of 30 different items.

- Unimportance: Just because you rank something last doesn’t mean it’s unimportant. Yet when we force people to rank a set of items, we implicitly assume it is. MaxDiff lets respondents signal that an item matters…even if they select it as “least important” on one screen, because that item reappears alongside different options on other screens.

Now, we’re not saying don’t include ranking questions in your surveys. They do have utility. Rather, we’re saying MaxDiff offers a more nuanced way to read preference.

How To Run A MaxDiff Study

Ready to plan your MaxDiff study? MaxDiff studies are run as online surveys. Here are the steps you’ll need to hit the ground running.

1. Develop Your Set Of Features

First off, develop the list of features you want to test. This should include everything from the features you think are necessary for an MVP to those you think are less relevant. After all, this is your chance to test your hypotheses and see whether customers actually want what you think they want.

Create enough features to give respondents a real chance to compare and contrast options, but not so many that it’s overwhelming. Our general rule of thumb is between 12 and 20 features.

2. Determine Who You Want In Your Study

Think about the profile of who you want to study. What must be true about someone to make them a potential customer? The answer is usually a mix of demographics and psychographics, and it drives the survey’s screener questions: the questions at the start of a survey that make sure you get the right people into your study.

3. Isolate Relevant Subgroups Among Respondents

While you have a target customer, you likely have key segments within that broader customer base. It’s often interesting to see if those segments react differently to the same questions or feature options. Plan those segments in advance so you have survey questions that let you slice and dice your data accordingly.

4. Identify Related, Relevant Questions To Ask

We like to get the most bang for the buck with any research project. That means doing more than just the MaxDiff. Think about other questions you might want to ask, such as category purchase drivers, barriers to purchase, or other related topics.

5. Draft Your Survey

Before you program your survey, write it out first. A written draft lets you evaluate its flow and check that it makes sense. It also lets you share the draft with team members to gather and incorporate their feedback.

6. Program Your Survey

Once you finalize the survey, it’s time to program it. We use a digital survey platform that lets us ask standard survey-style questions and program the MaxDiff portion.

7. Collect Responses

With your survey programmed, you’re ready to recruit respondents and collect data. There’s no hard-and-fast rule for how many respondents you need; it’s usually a function of how many features you’re testing. Generally, we aim to recruit 300-400 respondents, often more if we need to analyze data across many segments.

There are two typical ways we go about recruiting respondents:

- Customer Database: If you have a large database of customers or leads, that’s one great way to get respondents. However, remember that this is a biased group that already knows about your product. This can be a pro or a con, depending on what you’re looking to test.

- Research Panels: A second option is to use research panels. These are companies that specialize in building large databases of people open to taking surveys or doing interviews.

8. Analyze Results

Once your data is in, it’s time to look at the results.

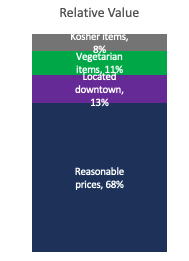

The graph above shows the greatest strength of MaxDiff research studies: visualizing the relative importance of different features. Because respondents make choices instead of rating items on a scale, the results avoid scale bias (such as some respondents rating everything highly), and you can see how much each feature matters to purchase decisions relative to the others.

You can also run a TURF (Total Unduplicated Reach and Frequency) analysis, a statistical method that identifies the mix of features that appeals to the greatest number of customers.

Of course, don’t forget to break down your analysis by customer segment to see whether certain features are what really drive some customers to buy.
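A simple way to get a first look at relative importance is a count-based score: for each item, take the number of times it was picked as best, subtract the number of times it was picked as worst, and divide by the number of times it was shown. Scores run from -1 (always worst) to +1 (always best). The Python sketch below assumes each response is stored as a `(shown_items, best_item, worst_item)` tuple; that layout and the sample responses are hypothetical. Many MaxDiff tools instead estimate utilities with a multinomial logit or hierarchical Bayes model, which this sketch does not do.

```python
from collections import Counter


def count_scores(responses):
    """Best-minus-worst count score per item, normalized by how often it was shown.

    Each response is a hypothetical (shown_items, best_item, worst_item) tuple.
    """
    shown, best, worst = Counter(), Counter(), Counter()
    for items, best_item, worst_item in responses:
        shown.update(items)
        best[best_item] += 1
        worst[worst_item] += 1
    return {item: (best[item] - worst[item]) / shown[item] for item in shown}


# Hypothetical responses from three screens
responses = [
    (["Price", "Location", "Service", "Menu variety"], "Price", "Menu variety"),
    (["Price", "Parking", "Service", "Ambience"], "Service", "Parking"),
    (["Location", "Parking", "Ambience", "Menu variety"], "Location", "Parking"),
]

scores = count_scores(responses)
for item, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{item:13s} {score:+.2f}")
```

Counts are easy to explain and audit, which makes them a useful sanity check next to whatever model your survey platform reports.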
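The core of TURF is “reach”: a bundle of features reaches a customer if it contains at least one feature that customer cares about, and the goal is the bundle that reaches the most customers. The Python sketch below brute-forces every combination of `k` features, which stays manageable for the 12-20 features recommended above and small bundles. How you decide which features a respondent “likes” is an assumption here (for example, items with a positive individual score, or each person’s top three); the sketch simply takes those sets as given. It computes reach only, not frequency, and the data are hypothetical.

```python
from itertools import combinations


def turf(liked_by_respondent, features, k):
    """Return the k-feature combination that reaches the most respondents (brute force).

    A respondent counts as reached if the bundle contains at least one feature they like.
    Ties keep the first combination found.
    """
    best_combo, best_reach = None, -1
    for combo in combinations(features, k):
        chosen = set(combo)
        reach = sum(1 for liked in liked_by_respondent if liked & chosen)
        if reach > best_reach:
            best_combo, best_reach = combo, reach
    return best_combo, best_reach


# Hypothetical "liked" sets for five respondents
liked = [
    {"Price", "Location"},
    {"Price"},
    {"Service", "Ambience"},
    {"Ambience"},
    {"Location", "Parking"},
]
features = ["Price", "Location", "Service", "Ambience", "Parking"]

combo, reach = turf(liked, features, k=2)
print(f"Best 2-feature bundle: {combo} reaches {reach} of {len(liked)} respondents")
```

In this toy data, two bundles tie at a reach of 4, which is common in practice; a fuller TURF analysis would use frequency (how many liked features each person gets) or item scores to break ties.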
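One way to start a segment breakdown is to compute the same best-minus-worst count score separately for each segment and look for items where the scores diverge. The Python sketch below assumes each response is tagged with the respondent’s segment as a `(segment, shown_items, best_item, worst_item)` tuple; the segment names and responses are hypothetical. With real data, check how many respondents sit in each segment before reading much into a gap, since small groups produce noisy scores.

```python
from collections import Counter, defaultdict


def scores_by_segment(responses):
    """Best-minus-worst count score per item, computed separately for each segment.

    Each response is a hypothetical (segment, shown_items, best_item, worst_item) tuple.
    """
    # For each segment: (times shown, times picked best, times picked worst)
    tallies = defaultdict(lambda: (Counter(), Counter(), Counter()))
    for segment, items, best_item, worst_item in responses:
        shown, best, worst = tallies[segment]
        shown.update(items)
        best[best_item] += 1
        worst[worst_item] += 1
    return {
        segment: {item: (b[item] - w[item]) / s[item] for item in s}
        for segment, (s, b, w) in tallies.items()
    }


# Hypothetical responses from two segments
responses = [
    ("Families", ["Price", "Parking", "Ambience", "Service"], "Parking", "Ambience"),
    ("Families", ["Price", "Parking", "Location", "Service"], "Price", "Location"),
    ("Couples", ["Price", "Parking", "Ambience", "Service"], "Ambience", "Parking"),
    ("Couples", ["Price", "Parking", "Location", "Service"], "Service", "Parking"),
]

scores = scores_by_segment(responses)
for item in scores["Families"]:
    fam, cou = scores["Families"][item], scores["Couples"][item]
    print(f"{item:10s} Families {fam:+.2f}  Couples {cou:+.2f}  gap {fam - cou:+.2f}")
```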

MaxDiff Research Limitations

As effective as MaxDiff research is, it has one major limitation: you can’t look at different “levels” within features. For instance, say you don’t just want to know how much flavor impacts a purchase decision. You also want to know whether a particular flavor (e.g., cherry, apple, or peach) improves preference. A MaxDiff can’t get you to that deeper level.

Conjoint analysis, however, can. It’s an even more powerful product prioritization method that captures this extra level of nuance. The tradeoff is that it’s more complex for researchers to implement and for participants to complete, so it may be overkill depending on your research questions.

If you want to learn more, check out this post. It compares and contrasts MaxDiff and Conjoint research to see which one is best for you.